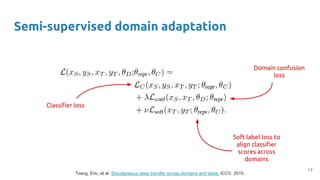

42 soft labels machine learning

UCI Machine Learning Repository: Mushroom Data Set In Proceedings of the 5th International Conference on Machine Learning, 73-79. Ann Arbor, Michigan: Morgan Kaufmann. Duch W, Adamczak R, Grabczewski K (1996) Extraction of logical rules from training data using backpropagation networks, in: Proc. of the 1st Online Workshop on Soft Computing, 19-30.Aug.1996, pp. 25-30, ...

Support-vector machine - Wikipedia The soft-margin support vector machine described above is an example of an empirical risk minimization (ERM) algorithm for the hinge loss. Seen this way, support vector machines belong to a natural class of algorithms for statistical inference, and many of their unique features are due to the behavior of the hinge loss.

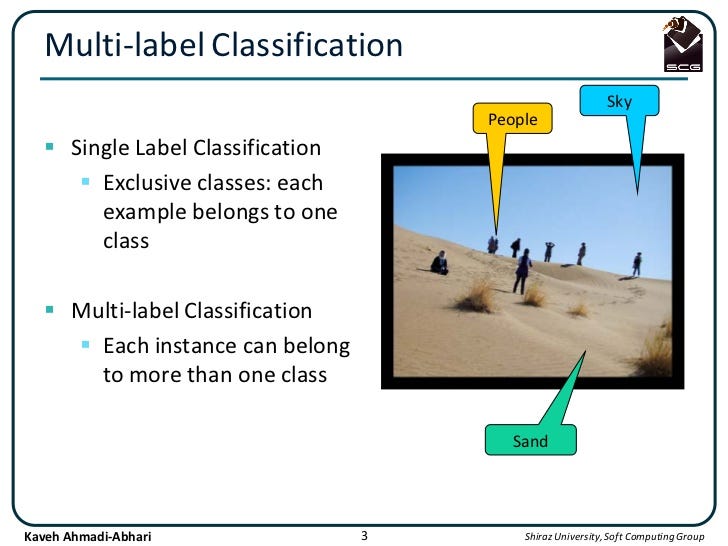

An introduction to MultiLabel classification - GeeksforGeeks To use those we are going to use the metrics module from sklearn, which takes the predictions made by the model on the test data and compares them with the true labels. Code: `predicted = mlknn_classifier.predict(X_test_tfidf)`, then `print(accuracy_score(y_test, predicted))` and `print(hamming_loss(y_test, predicted))`.
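To make the two metrics in the snippet above concrete, here is a minimal plain-Python sketch of what they measure on multi-label data. The function names `subset_accuracy` and `hamming_loss_manual` and the toy label matrices are illustrative assumptions, not part of the quoted tutorial; in practice the sklearn functions named above would be used.

```python
def subset_accuracy(y_true, y_pred):
    """Fraction of samples whose whole label vector is predicted exactly."""
    matches = sum(1 for t, p in zip(y_true, y_pred) if list(t) == list(p))
    return matches / len(y_true)


def hamming_loss_manual(y_true, y_pred):
    """Fraction of individual label slots that are predicted incorrectly."""
    wrong = sum(ti != pi for t, p in zip(y_true, y_pred) for ti, pi in zip(t, p))
    total = sum(len(t) for t in y_true)
    return wrong / total


# Toy data (assumed): two samples, three possible labels each.
y_true = [[1, 0, 1], [0, 1, 0]]
y_pred = [[1, 0, 0], [0, 1, 0]]

print(subset_accuracy(y_true, y_pred))      # one of two samples is fully correct
print(hamming_loss_manual(y_true, y_pred))  # one of six label slots is wrong
```

Subset accuracy is strict (one wrong label fails the whole sample), while Hamming loss gives partial credit per label, which is why the two are often reported together.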

Soft labels machine learning

Data Labeling Software: Best Tools for Data Labeling - neptune.ai In machine learning and AI development, the aspects of data labeling are essential. You need a structured set of training data that an ML system can learn from. It takes a lot of effort to create accurately labeled datasets. Data labeling tools come very much in handy because they can automate the labeling process, which […]

What is the definition of "soft label" and "hard label"? A soft label is one which has a score (probability or likelihood) attached to it. So the element is a member of the class in question with a probability/likelihood score of e.g. 0.7; this implies that an element can be a member of multiple classes (presumably with different membership scores), which is usually not possible with hard labels.

innovation-cat/Awesome-Federated-Machine-Learning Federated Learning (FL) is a new machine learning framework, which enables multiple devices to collaboratively train a shared model without compromising data privacy and security. This repository aims to keep tracking the latest research advancements of federated learning, including but not limited to research papers, books, codes, tutorials, …

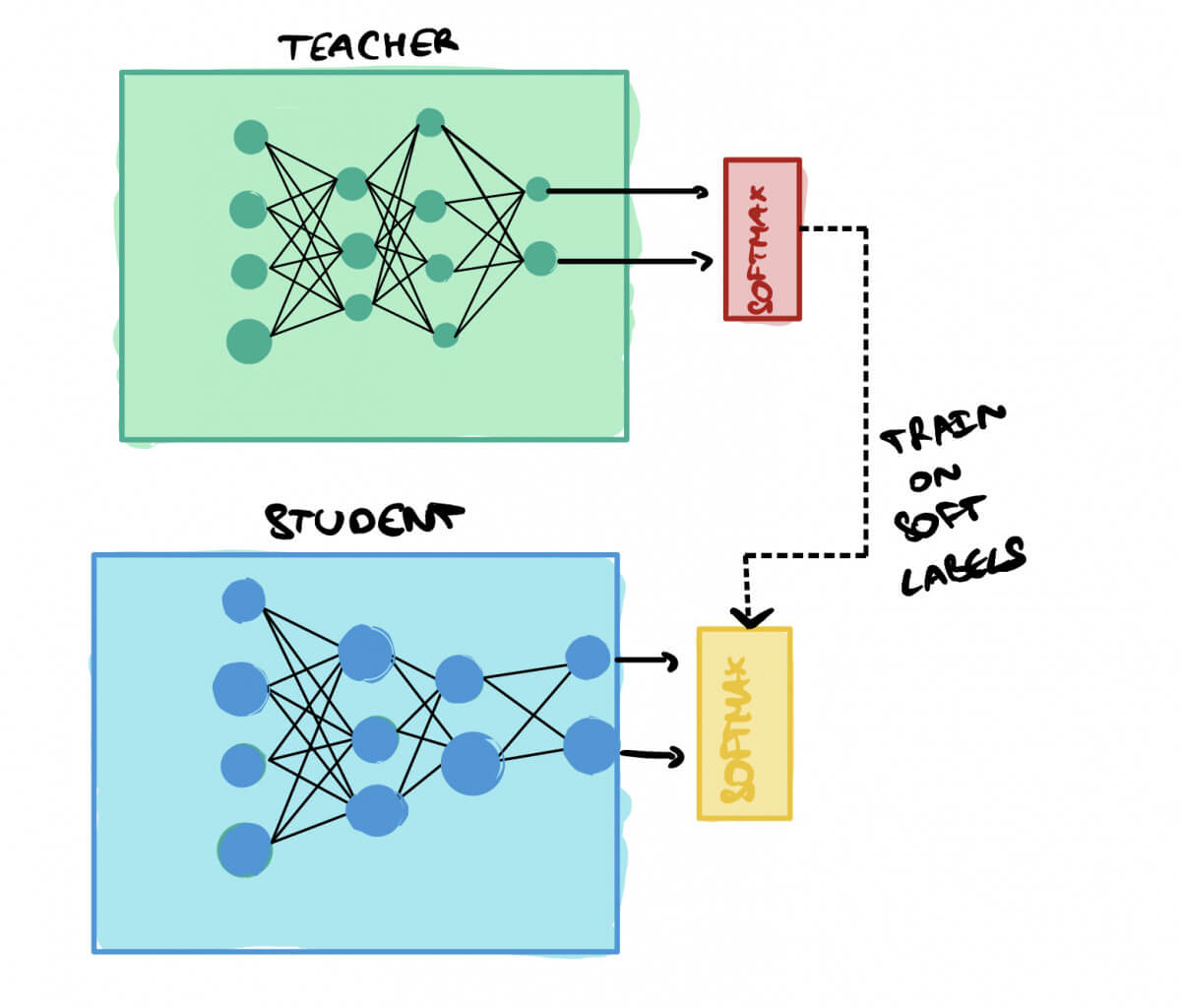

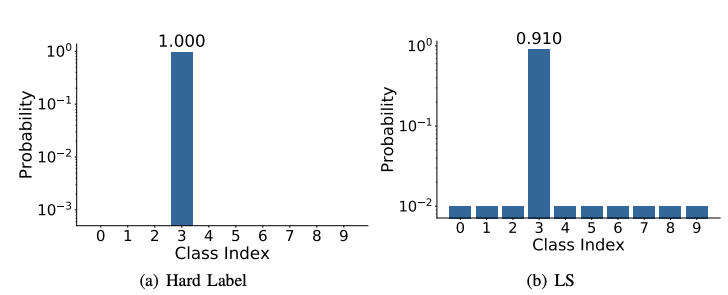

machine learning - What are soft classes? - Cross Validated You can't do that with hard classes, other than by creating two training instances with two different labels: x -> [1, 0, 0, 0, 0] and x -> [0, 0, 1, 0, 0]. As a result, the weights will probably bounce back and forth, because the two examples push them in different directions. That's when soft classes can be helpful.

Label Smoothing - Lei Mao's Log Book In machine learning or deep learning, we usually use a lot of regularization techniques, such as L1, L2, dropout, etc., to prevent our model from overfitting. ... Label smoothing is a regularization technique for classification problems to prevent the model from predicting the labels too confidently during training and generalizing poorly.

Guide to multi-class multi-label classification with neural networks in ... Often in machine learning tasks, you have multiple possible labels for one sample that are not mutually exclusive. This is called a multi-class, multi-label classification problem. Obvious suspects are image classification and text classification, where a document can have multiple topics. Both of these tasks are well tackled by neural networks.

MetaLabelNet: Learning to Generate Soft-Labels from Noisy-Labels Soft-labels are generated from extracted features of data instances, and the mapping function is learned by a single layer perceptron (SLP) network, which is called MetaLabelNet. The base classifier is then trained using these generated soft-labels. These iterations are repeated for each batch of training data.
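The label smoothing idea quoted above can be illustrated with a short sketch. It assumes the common uniform formulation, in which each one-hot entry y becomes (1 - epsilon) * y + epsilon / K for K classes; the function name `smooth_labels` and the value epsilon = 0.1 are illustrative choices rather than anything stated in the quoted sources.

```python
def smooth_labels(one_hot, epsilon=0.1):
    """Soften a one-hot vector by spreading epsilon uniformly over all classes."""
    k = len(one_hot)
    return [(1.0 - epsilon) * y + epsilon / k for y in one_hot]


hard = [0, 0, 1, 0]
soft = smooth_labels(hard, epsilon=0.1)
print(soft)       # the true class keeps most of the mass, the others get a little
print(sum(soft))  # still sums to (approximately) 1
```

Because the target for the true class is now slightly below 1, the model is no longer rewarded for pushing its predicted probability all the way to 1, which is the overconfidence the technique is meant to discourage.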

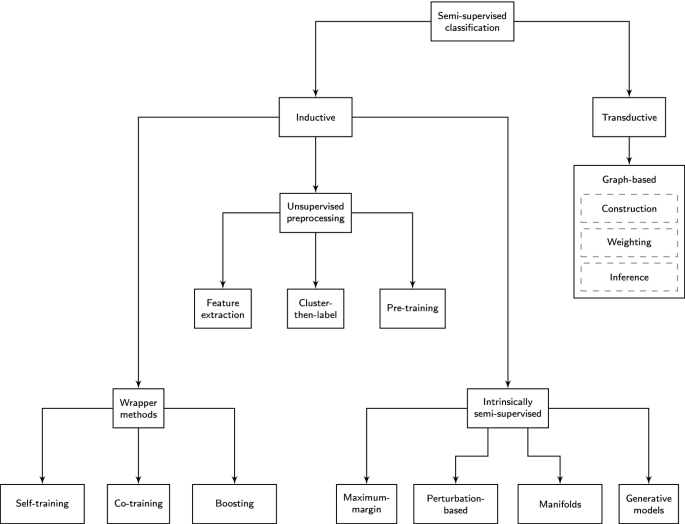

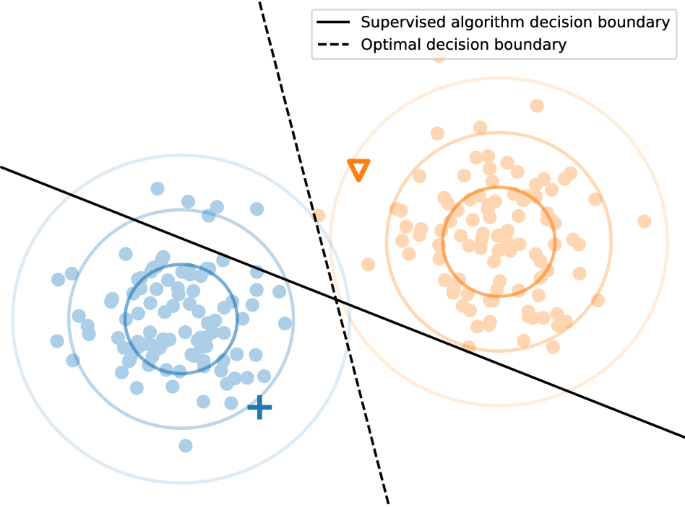

Semi-Supervised Learning With Label Propagation - Machine Learning Mastery Nodes in the graph then have soft labels or label distributions based on the labels or label distributions of examples connected nearby in the graph. Many semi-supervised learning algorithms rely on the geometry of the data induced by both labeled and unlabeled examples to improve on supervised methods that use only the labeled data.

Label smoothing with Keras, TensorFlow, and Deep Learning This type of label assignment is called soft label assignment. Unlike hard label assignments where class labels are binary (i.e., positive for one class and a negative example for all other classes), soft label assignment allows the positive class to have the largest probability, while all other classes have a very small probability.

Is it okay to use cross entropy loss function with soft labels? The sum is taken over the set of possible class labels. In the case of 'soft' labels like you mention, the labels are no longer class identities themselves, but probabilities over two possible classes. Because of this, you can't use the standard expression for the log loss. But, the concept of cross entropy still applies.

What is Data Labeling? | IBM Data labeling, or data annotation, is part of the preprocessing stage when developing a machine learning (ML) model. It requires the identification of raw data (i.e., images, text files, videos), and then the addition of one or more labels to that data to specify its context for the models, allowing the machine learning model to make accurate ...
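The point about cross entropy still applying to soft labels can be sketched as follows. This is a minimal illustration under the usual definition, H(t, p) = -sum_i t_i * log(p_i), where t is the (possibly soft) target distribution and p the predicted one; the function name `soft_cross_entropy` and the `eps` floor are assumptions added for the example.

```python
import math


def soft_cross_entropy(target, predicted, eps=1e-12):
    """Cross entropy between a target distribution and predicted probabilities.

    Works for hard (one-hot) and soft targets alike; eps guards against log(0).
    """
    return -sum(t * math.log(max(p, eps)) for t, p in zip(target, predicted))


predicted = [0.2, 0.7, 0.1]

# With a hard target this reduces to the familiar -log(p_true_class).
print(soft_cross_entropy([0, 1, 0], predicted))

# With a soft target every class contributes in proportion to its weight.
print(soft_cross_entropy([0.1, 0.8, 0.1], predicted))
```

With a one-hot target only the true-class term survives, which is the "standard expression" for log loss; with soft targets the full sum is needed.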

Efficient Learning of Classification Models from Soft-label Information ... soft-label further refining its class label. One caveat of applying this idea is that soft-labels based on human assessment are often noisy. To address this problem, we develop and test a new classification model learning algorithm that relies on soft-label binning to limit the effect of soft-label noise. We ...

Machine Learning: Algorithms and Applications - ResearchGate 13.07.2016 · Machine learning, one of the top emerging sciences, has an extremely broad range of applications. However, many books on the subject provide only a theoretical approach, making it difficult for a ...

Intro to Audio Analysis: Recognizing Sounds Using Machine Learning 12.09.2020 · This is the purpose of feature extraction (FE), the most common and important task in all machine learning and pattern recognition applications. FE is about extracting a set of features that are informative with respect to the desired properties of the original data. In our case, we are interested in extracting audio features that are capable of discriminating …

ARIMA for Classification with Soft Labels | by Marco Cerliani | Towards ... We have soft targets/labels p ∈ (0, 1) (make sure to clip the targets in [eps, 1 - eps] to avoid instability issues when we take logs). Then fit a regression model. Finally, to do inference, we take the sigmoid of the predictions from the regression model.
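The clip, fit, and sigmoid recipe in the last snippet above can be sketched in plain Python. This example assumes the regression is fit on the logit (log-odds) of the clipped targets, which is what makes the sigmoid the matching inverse at inference time; it also swaps in a one-feature least-squares line in place of ARIMA to stay short. The helper names, the toy data, and `eps = 1e-6` are all illustrative assumptions.

```python
import math


def logit(p, eps=1e-6):
    """Log-odds of p, after clipping p into [eps, 1 - eps] to keep the log finite."""
    p = min(max(p, eps), 1.0 - eps)
    return math.log(p / (1.0 - p))


def sigmoid(z):
    """Inverse of the logit: maps a real-valued score back into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def fit_line(xs, ys):
    """Ordinary least squares for one feature; returns (slope, intercept)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


# Toy data (assumed): one feature and a soft target per sample.
xs = [0.0, 1.0, 2.0, 3.0]
soft_targets = [0.0, 0.3, 0.7, 1.0]

# 1) clip and transform the targets, 2) fit a regression, 3) squash predictions.
slope, intercept = fit_line(xs, [logit(p) for p in soft_targets])


def predict(x):
    return sigmoid(slope * x + intercept)


print(predict(0.5), predict(1.5), predict(2.5))
```

Without the clipping step, targets of exactly 0 or 1 would make the logit infinite, which is the instability the snippet warns about.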

What is the difference between soft and hard labels? : r ... - reddit Hard Label = binary encoded e.g. [0, 0, 1, 0] Soft Label = probability encoded e.g. [0.1, 0.3, 0.5, 0.1] Soft labels have the potential to tell a model more about the meaning of each sample.
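The two encodings can be produced with a few lines of Python. In this sketch the hard label comes from a single class index, while the soft label is derived from several hypothetical annotator votes by taking vote fractions; the function names and the vote data are assumptions for illustration, and vote fractions are only one of several ways soft labels arise.

```python
from collections import Counter


def one_hot(class_index, num_classes):
    """Hard label: 1 for the chosen class, 0 everywhere else."""
    return [1 if i == class_index else 0 for i in range(num_classes)]


def soft_label_from_votes(votes, num_classes):
    """Soft label: the fraction of votes each class received (sums to 1)."""
    counts = Counter(votes)
    return [counts[i] / len(votes) for i in range(num_classes)]


print(one_hot(2, 4))                              # [0, 0, 1, 0]
print(soft_label_from_votes([2, 2, 1, 2, 0], 4))  # [0.2, 0.2, 0.6, 0.0]
```

Both vectors agree on the most likely class, but the soft version also records that classes 0 and 1 were considered plausible by some annotators.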

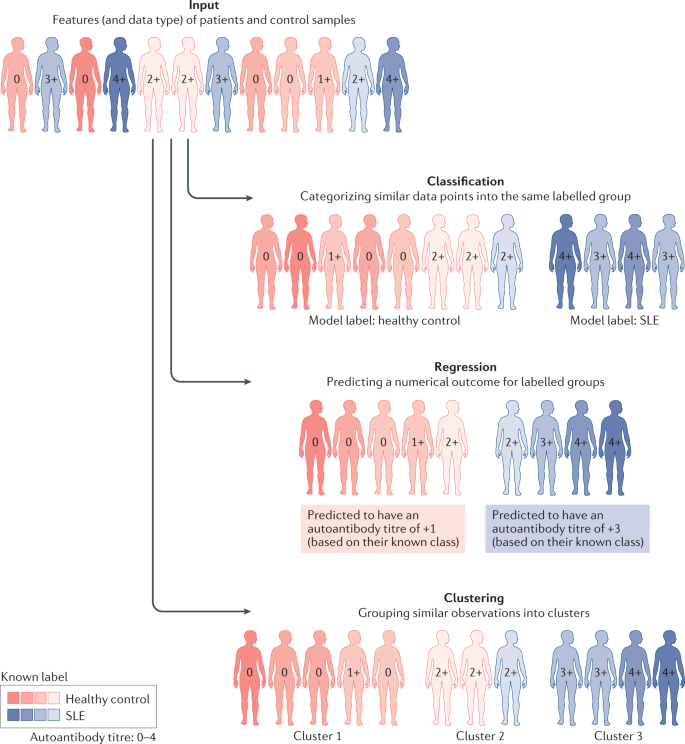

Learning classification models with soft-label information Materials and methods: Two types of methods that can learn improved binary classification models from soft labels are proposed. The first relies on probabilistic/numeric labels, the other on ordinal categorical labels. We study and demonstrate the benefits of these methods for learning an alerting model for heparin induced thrombocytopenia.

Machine Learning with Python - Algorithms - tutorialspoint.com This machine learns from past experiences and tries to capture the best possible knowledge to make accurate business decisions. Markov Decision Process is an example of Reinforcement Learning. List of Common Machine Learning Algorithms. Here is the list of commonly used machine learning algorithms that can be applied to almost any data …

Multi-Class Neural Networks: Softmax | Machine Learning - Google Developers Multi-Class Neural Networks: Softmax. Recall that logistic regression produces a decimal between 0 and 1.0. For example, a logistic regression output of 0.8 from an email classifier suggests an 80% chance of an email being spam and a 20% chance of it being not spam. Clearly, the sum of the probabilities of an email being either spam or not spam ...
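Softmax extends the same "probabilities sum to 1" property from two classes to many. The following is a minimal sketch of the standard definition, softmax(z)_i = exp(z_i) / sum_j exp(z_j); subtracting the maximum logit first is a common numerical-stability step, and the example logits are made up.

```python
import math


def softmax(logits):
    """Turn arbitrary real scores into probabilities that sum to 1."""
    m = max(logits)  # shift by the max so exp() cannot overflow
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


probs = softmax([2.0, 1.0, 0.1])
print(probs)       # the largest logit gets the largest probability
print(sum(probs))  # approximately 1
```

The output of a softmax layer is itself a soft label in the sense used throughout this page: a full distribution over classes rather than a single class identity.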

Blending Ensemble Machine Learning With Python Apr 27, 2021 · Blending is an ensemble machine learning algorithm. It is a colloquial name for stacked generalization or stacking ensemble where instead of fitting the meta-model on out-of-fold predictions made by the base model, it is fit on predictions made on a holdout dataset. Blending was used to describe stacking models that combined many hundreds of predictive […]

Pseudo Labelling - A Guide To Semi-Supervised Learning There are 3 kinds of machine learning approaches- Supervised, Unsupervised, and Reinforcement Learning techniques. Supervised learning as we know is where data and labels are present. Unsupervised Learning is where only data and no labels are present. Reinforcement learning is where the agents learn from the actions taken to generate rewards.

Regression - Features and Labels - Python Programming You have a few choices here regarding how to handle missing data. You can't just pass a NaN (Not a Number) datapoint to a machine learning classifier; you have to handle it. One popular option is to replace missing data with -99,999. With many machine learning classifiers, this will just be recognized and treated as an outlier feature.
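The sentinel-replacement option described above might look like this in plain Python. The function name `fill_missing` is hypothetical, and treating `None` as missing alongside NaN is an added assumption; the sentinel is the -99,999 from the snippet, written as `-99999` in code.

```python
import math


def fill_missing(values, sentinel=-99999):
    """Replace None and NaN entries with a sentinel value; keep everything else."""
    def is_missing(v):
        return v is None or (isinstance(v, float) and math.isnan(v))

    return [sentinel if is_missing(v) else v for v in values]


column = [1.5, float("nan"), None, 3]
print(fill_missing(column))
```

Whether an extreme sentinel is actually treated as a harmless outlier depends on the model, so this is a convenience rather than a universally safe choice; dropping or imputing missing rows are the usual alternatives.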

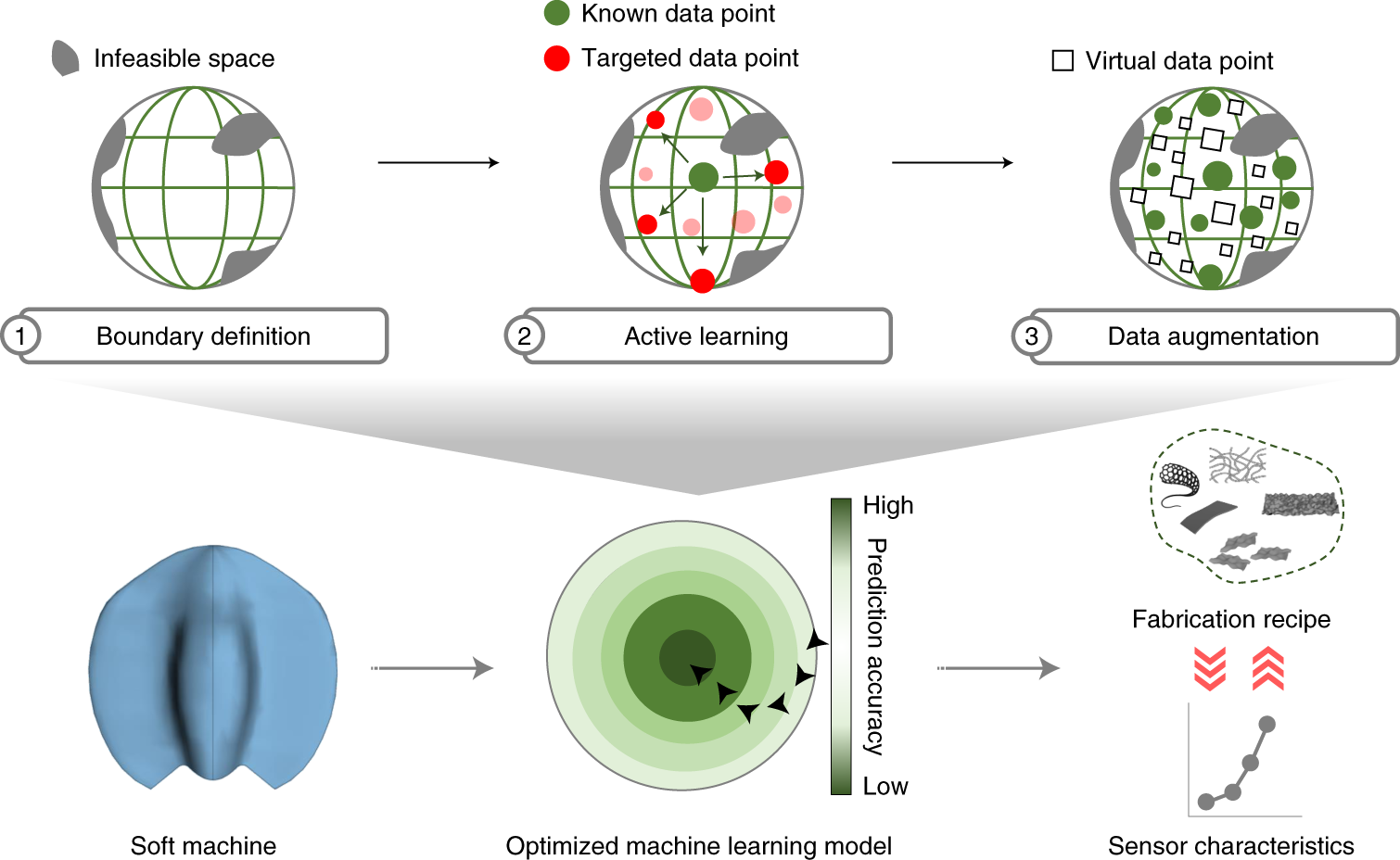

PDF Efficient Learning with Soft Label Information and Multiple Annotators Note that our learning from auxiliary soft labels approach is complementary to active learning: while the latter aims to select the most informative examples, we aim to gain more useful information from those selected. This gives us an opportunity to combine these two approaches.

How to Use Out-of-Fold Predictions in Machine Learning Machine learning algorithms are typically evaluated using resampling techniques such as k-fold cross-validation. During the k-fold cross-validation process, predictions are made on test sets comprised of data not used to train the model. These predictions are referred to as out-of-fold predictions, a type of out-of-sample predictions.
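The out-of-fold procedure described above can be sketched without any library. In this illustration the folds are assigned by index modulo k and the "model" is a trivial predictor that returns the mean training target; both choices, and all names, are assumptions made to keep the example small.

```python
def out_of_fold_predictions(xs, ys, k, fit, predict):
    """Predict every sample with a model that never saw it during training."""
    n = len(xs)
    oof = [None] * n
    for fold in range(k):
        test_idx = [i for i in range(n) if i % k == fold]
        train_idx = [i for i in range(n) if i % k != fold]
        model = fit([xs[i] for i in train_idx], [ys[i] for i in train_idx])
        for i in test_idx:
            oof[i] = predict(model, xs[i])
    return oof


def fit_mean(train_xs, train_ys):
    """Toy 'model': just remember the mean of the training targets."""
    return sum(train_ys) / len(train_ys)


def predict_mean(model, x):
    """Toy prediction: ignore the input and return the stored mean."""
    return model


xs = [0, 1, 2, 3]
ys = [1.0, 2.0, 3.0, 4.0]
print(out_of_fold_predictions(xs, ys, k=2, fit=fit_mean, predict=predict_mean))
# [3.0, 2.0, 3.0, 2.0]
```

Each prediction comes from a model trained on the other fold only, which is what makes out-of-fold predictions usable as unbiased inputs for a meta-model in stacking.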

Machine learning - Wikipedia Machine learning (ML) ... Some of the training examples are missing training labels, yet many machine-learning researchers have found that unlabeled data, when used in conjunction with a small amount of labeled data, can produce a considerable improvement in learning accuracy. In weakly supervised learning, the training labels are noisy, limited, …

Unsupervised Machine Learning: Examples and Use Cases Unsupervised machine learning is the process of inferring underlying hidden patterns from historical data. Within such an approach, a machine learning model tries to find any similarities, differences, patterns, and structure in data by itself. No prior human intervention is needed. Let’s get back to our example of a child’s experiential learning. Picture a …

Learning Soft Labels via Meta Learning - Apple Machine Learning Research The learned labels continuously adapt themselves to the model's state, thereby providing dynamic regularization. When applied to the task of supervised image-classification, our method leads to consistent gains across different datasets and architectures. For instance, dynamically learned labels improve ResNet18 by 2.1% on CIFAR100.

Creating targets for machine learning labels - Python Programming This function will take any ticker, create the needed dataset, and create our "target" column, which is our label. The target column will have either a -1, 0, or 1 for each row, based on our function and the columns we feed through. Now, we can get the distribution:

python - scikit-learn classification on soft labels - Stack Overflow Generally speaking, the form of the labels ("hard" or "soft") is given by the algorithm chosen for prediction and by the data on hand for target. If your data has "hard" labels, and you desire a "soft" label output by your model (which can be thresholded to give a "hard" label), then yes, logistic regression is in this category.
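The thresholding step mentioned above, turning a model's "soft" probability outputs into "hard" labels, is a one-liner. The function name `harden` and the default threshold of 0.5 are illustrative assumptions; the right threshold depends on the application.

```python
def harden(probabilities, threshold=0.5):
    """Map each predicted probability to 1 if it reaches the threshold, else 0."""
    return [1 if p >= threshold else 0 for p in probabilities]


soft_outputs = [0.2, 0.5, 0.9]
print(harden(soft_outputs))                 # [0, 1, 1]
print(harden(soft_outputs, threshold=0.8))  # [0, 0, 1]
```

Raising the threshold trades recall for precision on the positive class, which is one reason to keep the soft output around rather than hardening it immediately.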

Label Smoothing — Make your model less (over)confident Label smoothing is often used to increase robustness and improve classification problems. Label smoothing is a form of output distribution regularization that prevents overfitting of a neural network by softening the ground-truth labels in the training data in an attempt to penalize overconfident outputs. The intuition behind label smoothing is ...

Features and labels - Module 4: Building and evaluating ML models ... This module explores the various considerations and requirements for building a complete dataset in preparation for training, evaluating, and deploying an ML model. It also includes two demos—Vision API and AutoML Vision—as relevant tools that you can easily access yourself or in partnership with a data scientist.

[2009.09496] Learning Soft Labels via Meta Learning - arXiv.org Learning Soft Labels via Meta Learning, by Nidhi Vyas, Shreyas Saxena, Thomas Voice. One-hot labels do not represent soft decision boundaries among concepts, and hence, models trained on them are prone to overfitting. Using soft labels as targets provides regularization, but different soft labels might be optimal at different stages of optimization.

How to Label Data for Machine Learning: Process and Tools - AltexSoft Data labeling (or data annotation) is the process of adding target attributes to training data and labeling them so that a machine learning model can learn what predictions it is expected to make. This process is one of the stages in preparing data for supervised machine learning.

Understanding Deep Learning on Controlled Noisy Labels - Google AI Blog In "Beyond Synthetic Noise: Deep Learning on Controlled Noisy Labels", published at ICML 2020, we make three contributions towards better understanding deep learning on non-synthetic noisy labels. First, we establish the first controlled dataset and benchmark of realistic, real-world label noise sourced from the web (i.e., web label noise ...

![[PDF] Making the most of small Software Engineering datasets ...](https://d3i71xaburhd42.cloudfront.net/c65dcc599f4539295c83b8d12f7bbf9b361e4697/8-Table2-1.png)

![[PDF] Utilizing Knowledge Distillation in Deep Learning for ...](https://d3i71xaburhd42.cloudfront.net/21424b25b9718a13a3ce01c8d1a828ac01655a4a/5-Figure1-1.png)
